Large Language Model

Large Language Models (LLMs) can understand the meaning of a user's input and generate an appropriate response. Hongtu helps developers integrate an LLM with other parts of their application or with third-party APIs.

Prerequisite

This feature requires you to be logged in.

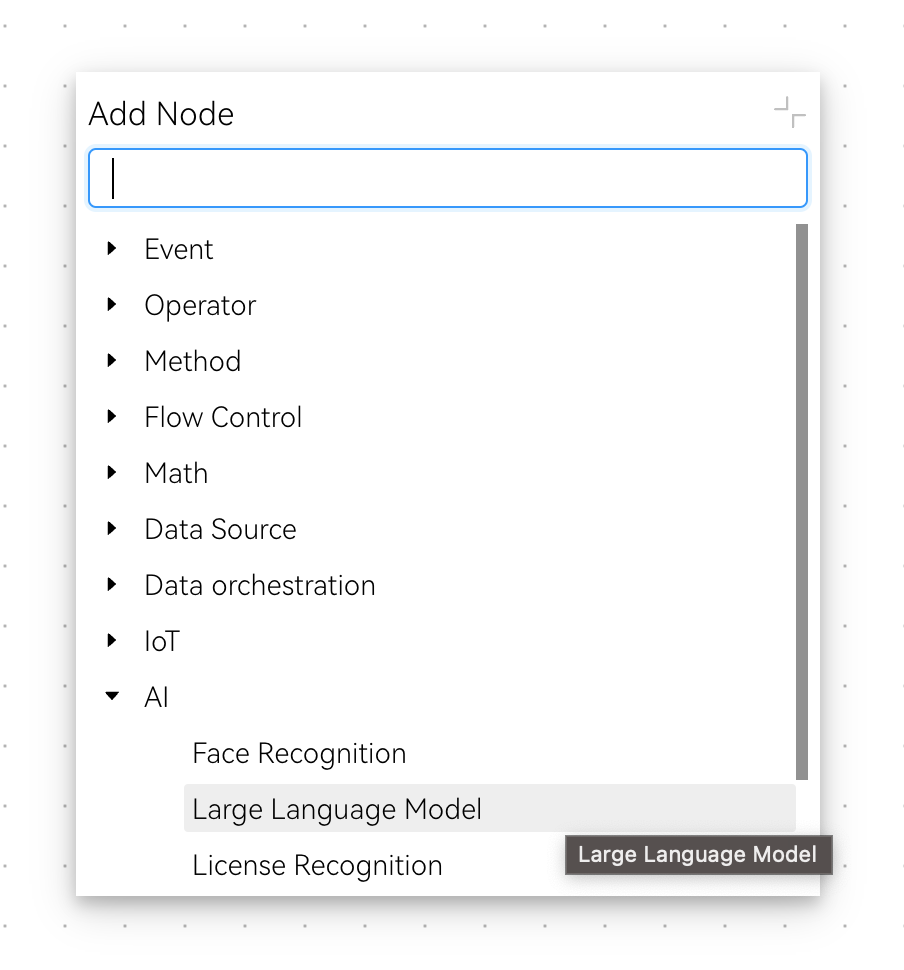

Add Node

Right-click anywhere on the canvas and select AI/Large Language Model.

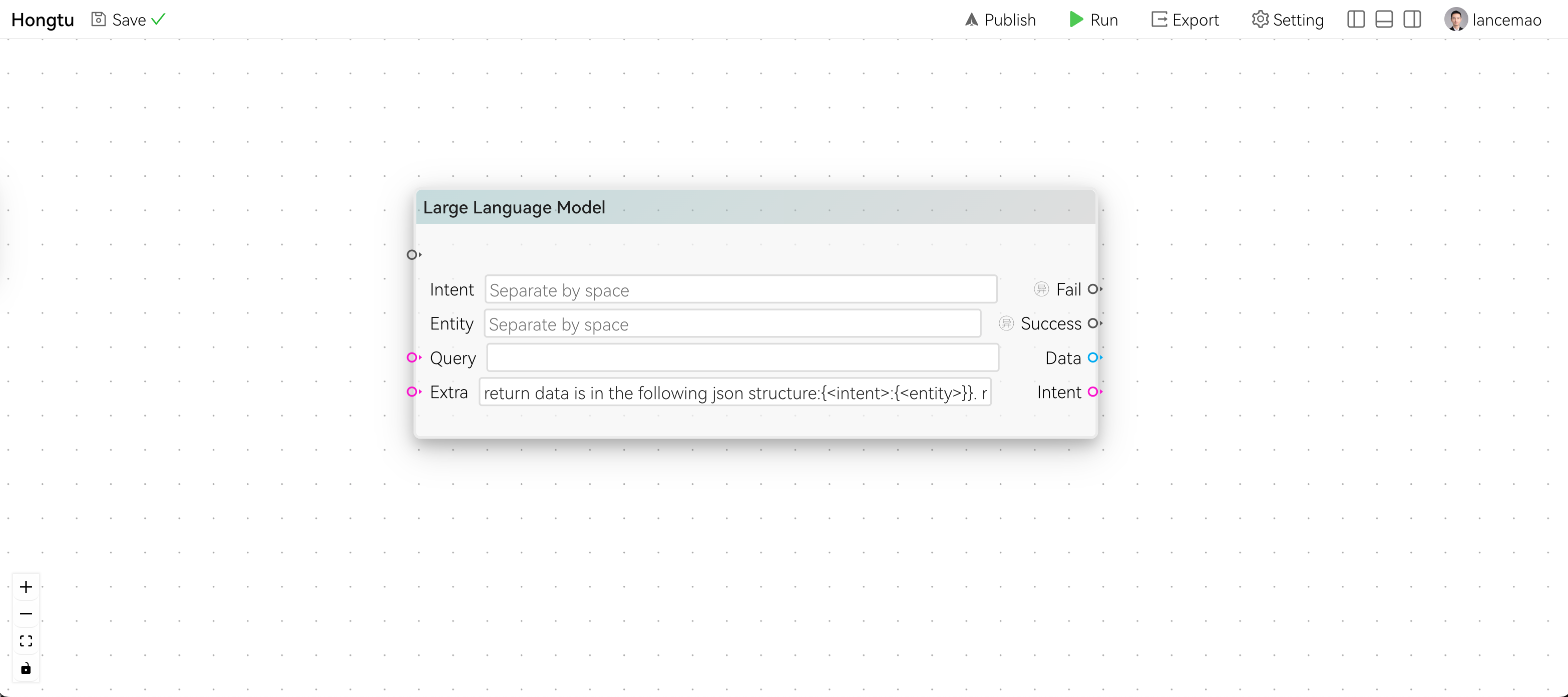

After adding the node, it looks like this:

On the left, the node has the following inputs:

- Intent: classifies the user's input.
- Entity: identifies the object of the Intent. For example, in "Send email to Bob", Send Email is the Intent and Bob is the Entity.
- Query: the user's actual input.
- Extra: any additional information that helps the LLM return the correct result.

TIP

Together, these four parts are called the Prompt.
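Hongtu assembles the Prompt for you, and its exact template is not described on this page. As a rough mental model only, the sketch below joins the four parts into a single text prompt. The function `build_prompt`, the section labels, their order, and the names `sendEmail` and `recipient` are all hypothetical assumptions, not Hongtu's actual implementation.

```python
def build_prompt(intents, entities, query, extra):
    """Hypothetical sketch: join the four Prompt parts into one text prompt.

    The section labels and their order are assumptions for illustration.
    """
    lines = [
        "Intents: " + ", ".join(intents),
        "Entities: " + ", ".join(entities),
    ]
    # Each Extra instruction goes on its own line.
    lines += ["Extra: " + item for item in extra]
    # The user's actual input comes last.
    lines.append("Query: " + query)
    return "\n".join(lines)


prompt = build_prompt(
    intents=["sendEmail"],       # hypothetical intent name
    entities=["recipient"],      # hypothetical entity name
    query="Send email to Bob",
    extra=["return only the json without any other helping words"],
)
print(prompt)
```

Keeping each part labelled makes it easy to see how adding an intent or entity only changes one line of the prompt.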

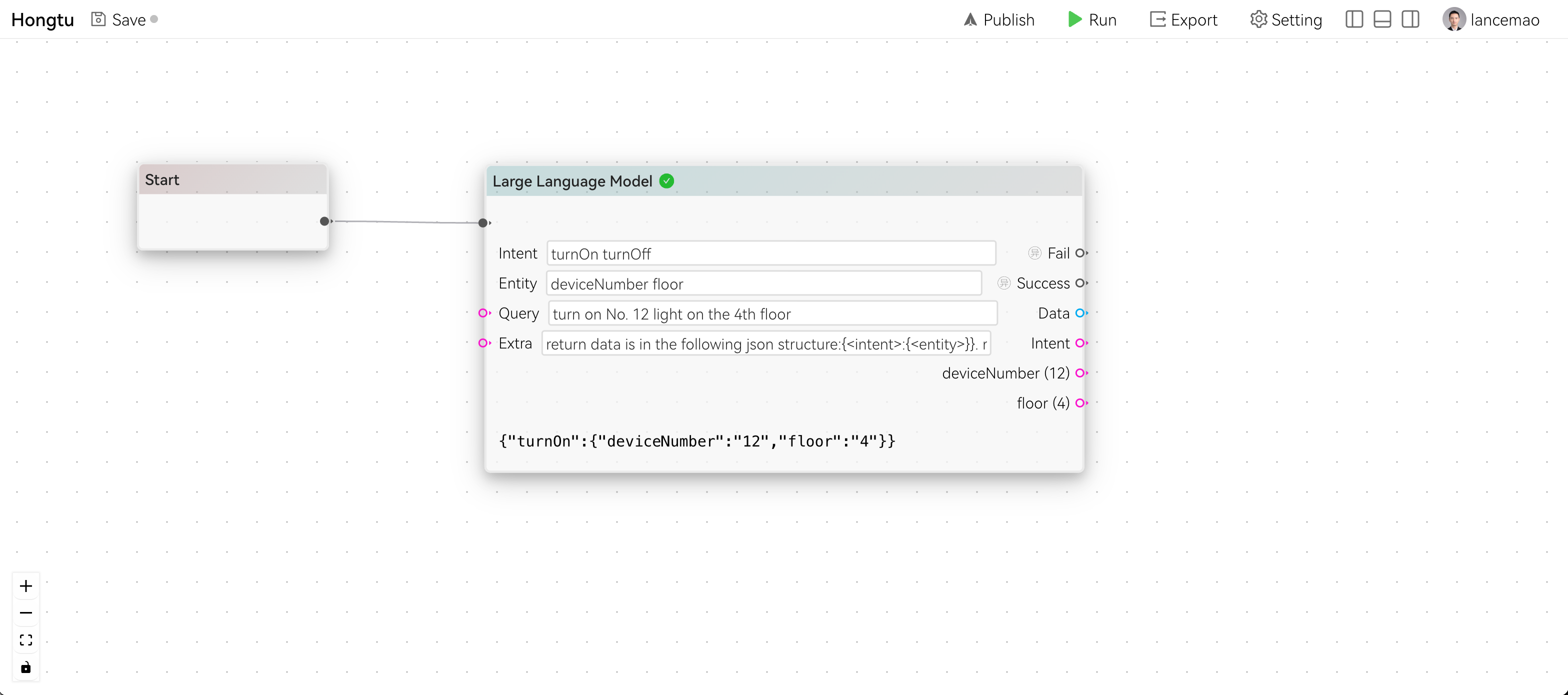

Suppose we want to switch a light on or off. Let's see how to implement this with an LLM:

In this example, we tell the LLM that there are two intents, turnOn and turnOff, and two entities, Floor and deviceNumber. We also add the following extra instructions:

- Extra 1: "return data is in the following json structure: {<intent>:{<entity>}}." This tells the LLM the JSON structure to use.

- Extra 2: "return only the json without any other helping words". Without this, the LLM might return other text alongside our JSON.

Note that the output on the right contains:

- Data: the raw data returned by the LLM
- Intent: the recognized intent
- Entity: a dynamic list of the recognized entities
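Extra 1 asks the LLM for a reply shaped like {<intent>:{<entity>}}. The sketch below shows one plausible way the raw Data could be split into the Intent and Entity outputs. The function `parse_reply` and the sample reply are hypothetical, and Hongtu's own parsing may differ, for example in how it handles malformed JSON.

```python
import json


def parse_reply(data):
    """Hypothetical sketch: split a reply shaped like {<intent>: {<entity>}}.

    Returns (intent, entities). Assumes the LLM followed the Extra
    instructions and returned bare JSON with exactly one top-level key.
    """
    parsed = json.loads(data)
    if not isinstance(parsed, dict) or len(parsed) != 1:
        raise ValueError("expected exactly one intent at the top level")
    # The single top-level key is the intent; its value holds the entities.
    intent, entities = next(iter(parsed.items()))
    return intent, entities


data = '{"turnOn": {"Floor": 4, "deviceNumber": 12}}'  # hypothetical reply
intent, entities = parse_reply(data)
print(intent)    # turnOn
print(entities)  # {'Floor': 4, 'deviceNumber': 12}
```

This is also why Extra 2 matters: any extra words around the JSON would make `json.loads` fail.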

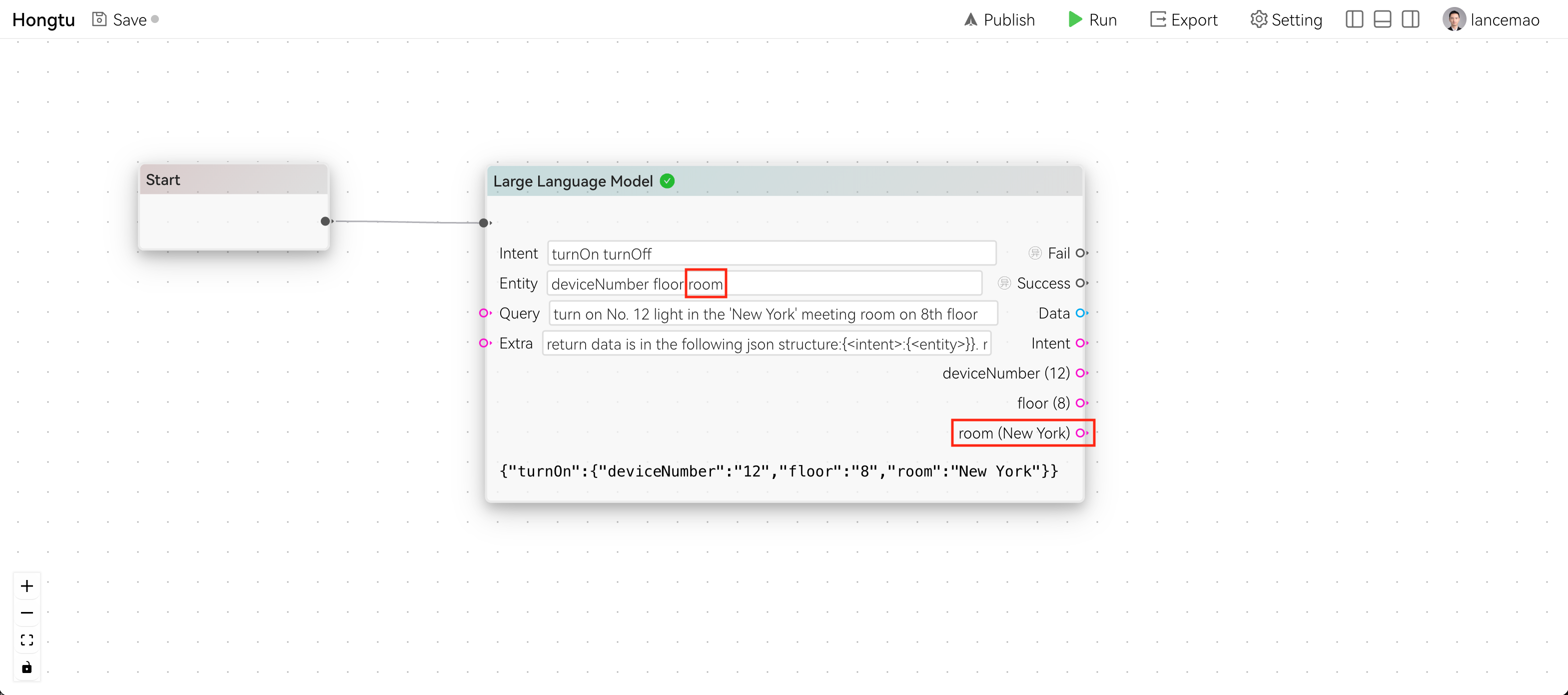

Extend Intent & Entity

Because LLMs are generative, we can add more intents and entities directly to the prompt without any training:

More Scenarios

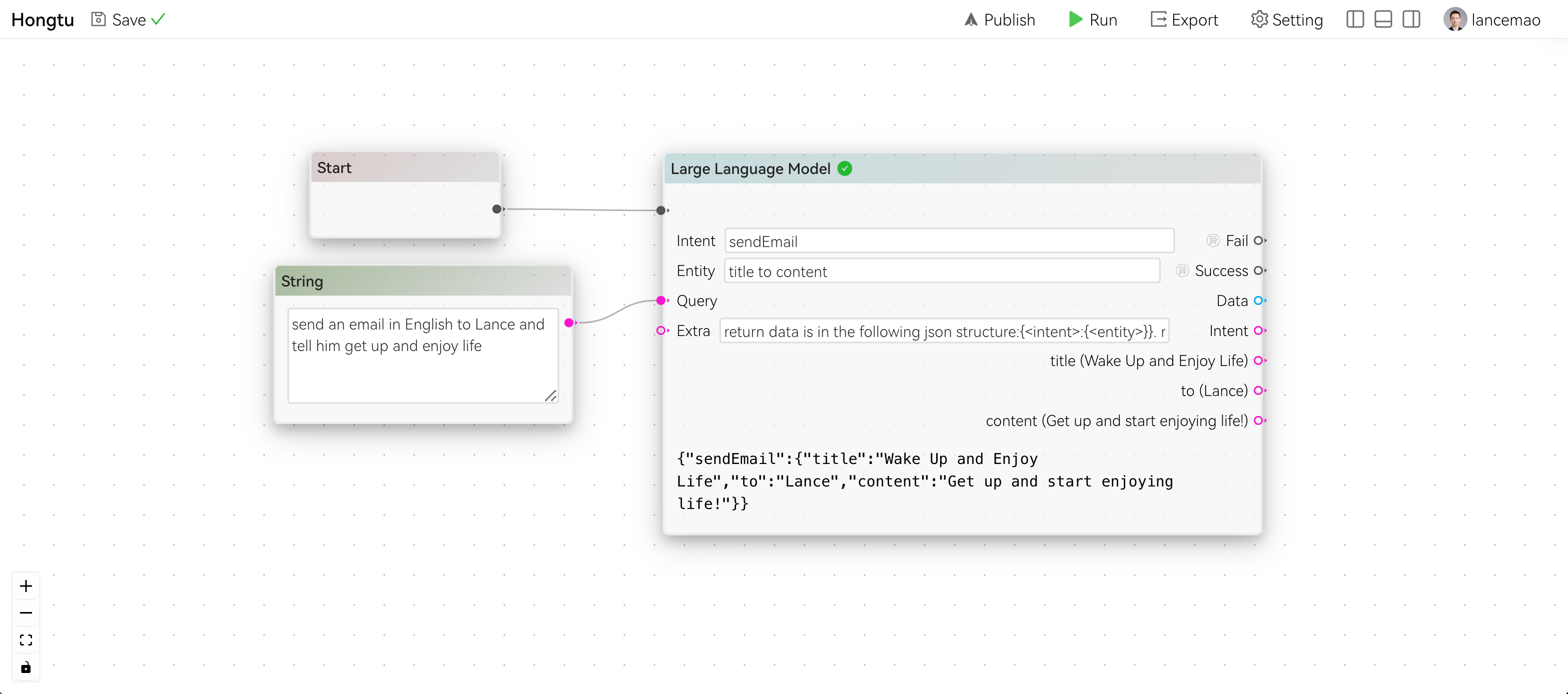

Using this Intent & Entity pattern, we can easily implement other scenarios:

Multistep

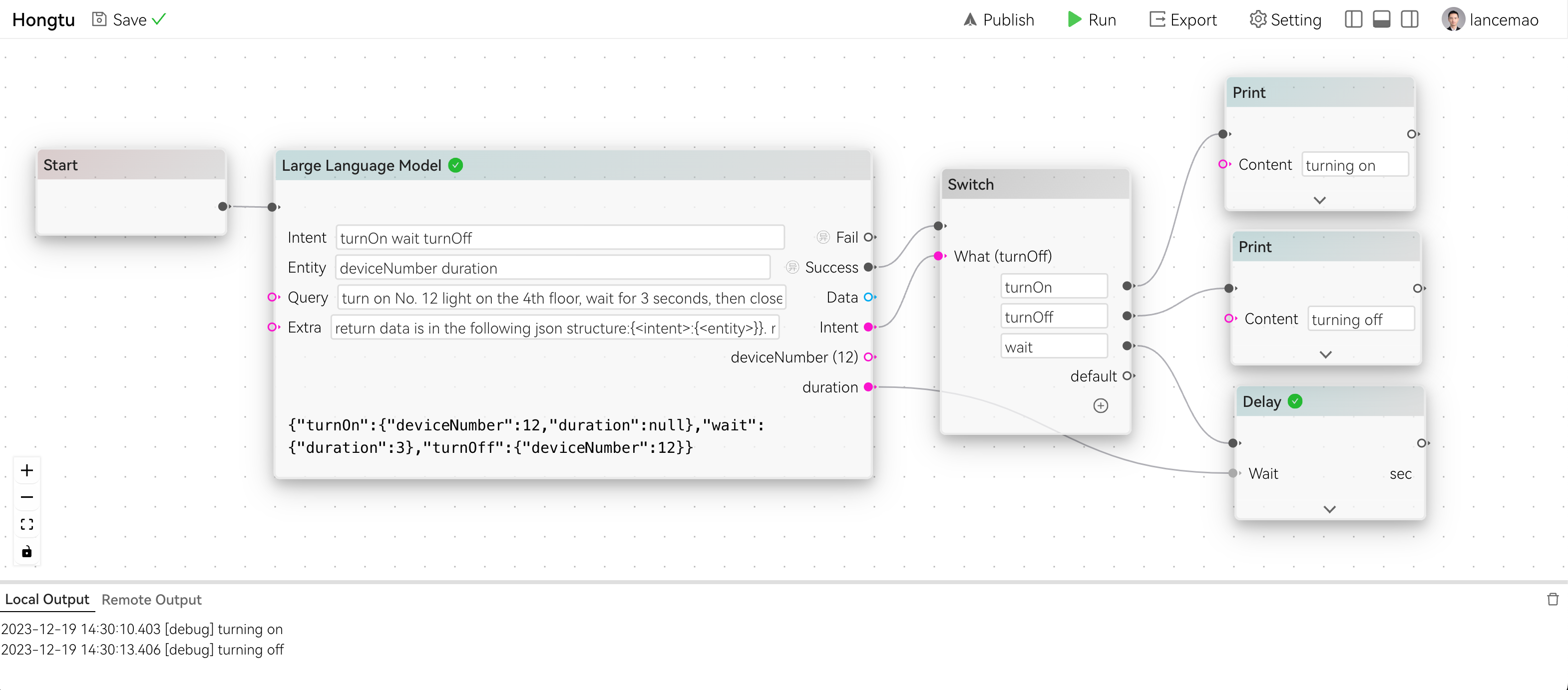

LLMs also support multistep requests: instead of returning a single Intent, they can return a list of Intents, each of which may contain a list of Entities. For example, we can ask the LLM to turn on a light, wait for some time, and then turn it off. Hongtu breaks the response into individual steps and runs the flow for each one accordingly. Here is the query we give to the LLM:

turn on No. 12 light on the 4th floor, wait for 3 seconds, then close it

Here is the Hongtu flow we build:

We use Switch to branch the flow according to the Intent, so we can focus on how to handle each specific intent and leave the rest to the LLM and Hongtu.
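The multistep reply and the Switch branching can be sketched in plain Python. This is not Hongtu's implementation: the list-of-objects reply shape, the `wait` intent with its `seconds` entity, and the `run_steps` function are hypothetical, chosen to mirror the query above.

```python
import json
import time


def run_steps(data, sleep=time.sleep):
    """Hypothetical sketch: run a multistep reply one Intent at a time.

    `data` is assumed to be a JSON list of {<intent>: {<entity>}} objects.
    The "wait" intent and its "seconds" entity are illustrative names.
    `sleep` is injectable so the flow can be tested without real waiting.
    Returns a log of the actions taken, in order.
    """
    log = []
    for step in json.loads(data):
        intent, entities = next(iter(step.items()))
        # Branch on the Intent, like the Switch in the flow.
        if intent == "turnOn":
            log.append(f"on: floor {entities['Floor']}, device {entities['deviceNumber']}")
        elif intent == "turnOff":
            log.append(f"off: floor {entities['Floor']}, device {entities['deviceNumber']}")
        elif intent == "wait":
            sleep(entities["seconds"])
            log.append(f"waited {entities['seconds']}s")
        else:
            log.append(f"unhandled intent: {intent}")
    return log


# Hypothetical reply for the query above; note turnOff repeats deviceNumber.
reply = json.dumps([
    {"turnOn": {"Floor": 4, "deviceNumber": 12}},
    {"wait": {"seconds": 3}},
    {"turnOff": {"Floor": 4, "deviceNumber": 12}},
])
for line in run_steps(reply):
    print(line)
```

Each branch handles only its own intent, so supporting a new intent means adding one branch, which mirrors how the Switch keeps the flow focused on per-intent handling.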

Amazing

- When we say "close it", the LLM not only translates it to turnOff but also returns the correct deviceNumber, even though we didn't repeat it. It understands the context.
- We can also confirm that the flow actually waited 3 seconds by checking the logs in the console at the bottom.